Once upon a time there was a farmer who had a tractor and a prize cow Betsy. Every time he began to milk Betsy, his tractor would turn on all by itself. People came from far and wide to see this. The farmer would start milking Betsy and the parked tractor magically turned on. To this day no one has been able to provide a logical explanation for the bizarre interaction between Betsy and the farmer’s tractor.

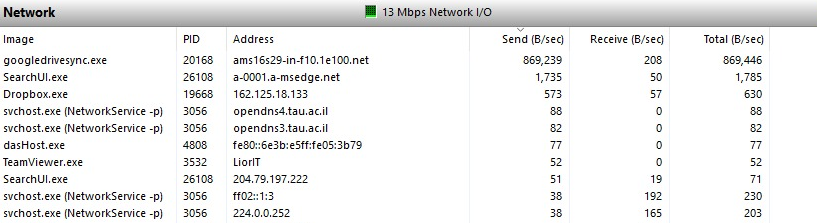

In August 2019, a number of researchers at Tel Aviv University complained to IUCC about poor upload performance when using the Google Drive File Stream client.

When using Google’s Chrome browser, upload performance was fine. We did a bit of research and learned that Chrome used UDP while Google Drive File Stream used TCP. Even with numerous pcaps, we still could not find what the issue was.

The researchers showed us that when moving to another ISP, upload performance was fine. Upload performance issues via Google Drive File Stream only occurred when using IUCC’s network. We checked all the components looking for a fault. We reviewed router configurations in the data path. We checked for any rate limiters or any other configuration option that could explain the upload throttling. We reviewed traceroutes. We eliminated any firewall or switch issue by directly connecting a test PC to a router port. We had our upstream provider, GÉANT, run numerous tests as well, and we did a full review of the complete router configurations. Yet we couldn’t find any explanation for why upload performance via Google Drive File Stream was poor only from IUCC (AS378).

As a workaround, we asked the researchers to try rclone. This helped, but rclone lacked some of the bells and whistles they needed from Google Drive File Stream.

So we kept searching. We considered that there may be a load balancing issue on the LACP bundles of our aggregated international links. So we scheduled late night maintenance windows – again – and stripped apart the LACP bundle and tested every single link by itself or in a bundle. We tried carving out a /24 from our network and letting some other ISP announce it to locations abroad. No go. We shut down our primary Israel-London link and let our traffic flow via our backup Israel-Frankfurt link. That didn’t help either.

As a last resort at the end of the maintenance window, the NOC staff tried shutting off our GGC (Google Global Cache). In theory, this should have no interaction with uploads to individual Google Drives. (In fact, when we asked the staff afterward what made them think of shutting off the GGC when it wasn’t part of the maintenance plan, they answered that they had no idea except for the fact that it contained the word “Google.”) The GGC is not on the data path and, as with all GGC installations, it is located someplace inside the cloud of an ASN.

Bingo! Performance jumped from 2 MBytes/sec to 30 MBytes/sec. This made no sense: GGC exists to improve performance for end users by caching commonly requested Google pages locally inside an ISP.

We turned GGC back on, but we were unable to continue our maintenance window due to the coronavirus change freeze. We opened a ticket with Google. Their response: “This is most likely a coincidence – GGC does not handle uploads to Google services.”

Exactly what we thought!

But with some extra time on our hands during the coronavirus change freeze, we discovered Google’s page on configuring BGP for GGC, which states:

Prefix advertisements to upstreams

You should ensure that user prefixes advertised to GGC nodes are also advertised upstream (at AS15169 peering, and via your transit providers), with the same prefix lengths.

Common misconfigurations, which can result in undesirable traffic flows, include:

- User prefixes seen at GGC, but missing from advertisements to AS15169 or transit
- User prefixes advertised more specifically at peering / transit than at GGC

The researchers who reported the issue were from Tel Aviv University. On our peering abroad (AS378), Tel Aviv University appears as 132.64.0.0/13. To the GGC we announce it as 132.66.0.0/16. Neither of the listed misconfigurations applies: no prefix is missing upstream, and nothing is announced more specifically at peering or transit than at the GGC. The only possible issue was the reverse case: we announced a more specific prefix to the GGC than the one that appears via our transit.
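The comparison described above can be sketched in a few lines of Python using the standard `ipaddress` module. This is only an illustration of the reasoning, not a tool we ran at the time; the function name `compare_announcements` and the wording of the result labels are our own assumptions, and the prefix lists are simply the two announcements mentioned in the text.

```python
import ipaddress


def compare_announcements(ggc_prefixes, upstream_prefixes):
    """Classify each prefix announced to the GGC against the upstream set.

    Hypothetical helper: the labels are illustrative and do not come
    from any Google tool or document.
    """
    upstream = [ipaddress.ip_network(p) for p in upstream_prefixes]
    findings = {}
    for text in ggc_prefixes:
        net = ipaddress.ip_network(text)
        if net in upstream:
            # Same prefix and same length on both sides.
            findings[text] = "match"
            continue
        # Upstream prefixes that contain this GGC prefix.
        covering = [u for u in upstream
                    if u.version == net.version and net.subnet_of(u)]
        if covering:
            findings[text] = f"more specific at GGC than upstream {covering[0]}"
            continue
        # Upstream prefixes that are contained in this GGC prefix.
        covered = [u for u in upstream
                   if u.version == net.version and u.subnet_of(net)]
        if covered:
            findings[text] = f"less specific at GGC than upstream {covered[0]}"
        else:
            findings[text] = "missing upstream"
    return findings


# The two announcements from the text: /16 to the GGC, /13 upstream.
result = compare_announcements(["132.66.0.0/16"], ["132.64.0.0/13"])
print(result)
```

For our announcements, the /16 falls inside the /13, so the sketch reports it as more specific at the GGC, which is the one case the Google page does not list explicitly.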

So we decided to test modifying our announcement to the GGC, since it would not affect our users. Once we removed the /16 announcement to the GGC and matched the announcements exactly as specified on the Google support page, the performance issue disappeared: Google Drive File Stream uploads jumped from 2 MBytes/sec to 30 MBytes/sec!

Logically, there should be absolutely no interaction between GGC and uploads to Google Drive. That is what we thought and that is what Google told us was true. Numerous Google forums discuss these same performance issues from users all over the world and no one could find a satisfactory answer. Until now.

Then performance dropped again after a few days. Suddenly, IUCC received a ticket from GGC about our IPv6 announcements. The ticket claimed we were sending overly specific announcements to the GGC. Indeed, we were announcing a number of /64s, in addition to our standard /32, both abroad and to the GGC. The ticket indicated that the longest prefix allowed to the GGC should be a /48. So we started along that path and decided to shut down our IPv6 peer to the GGC. Google requested we restart it. Then a new note appeared on the Google BGP configuration page for GGC, indicating that the longest prefix allowed for IPv6 should be a /56. And on May 1 Google did some maintenance on our GGC cluster.
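A prefix-length limit like the one in the ticket is easy to check before announcing. The following is a minimal sketch, assuming the limit is a plain maximum prefix length; the function name `too_specific` is hypothetical, and the prefixes are placeholders from the IPv6 documentation range (2001:db8::/32), not IUCC’s actual announcements.

```python
import ipaddress


def too_specific(prefixes, max_len_v6=48):
    """Return the IPv6 prefixes longer than max_len_v6.

    Hypothetical helper; the default of 48 is the limit quoted in the
    ticket, and 56 is the value that later appeared on the Google page.
    """
    flagged = []
    for text in prefixes:
        net = ipaddress.ip_network(text)
        if net.version == 6 and net.prefixlen > max_len_v6:
            flagged.append(text)
    return flagged


# Placeholder announcements: one aggregate, one /56, one /64.
announced = ["2001:db8::/32", "2001:db8:0:100::/56", "2001:db8:0:1::/64"]
print(too_specific(announced))                 # checked against /48
print(too_specific(announced, max_len_v6=56))  # checked against /56
```

Under either limit, the /64s we were announcing would be flagged.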

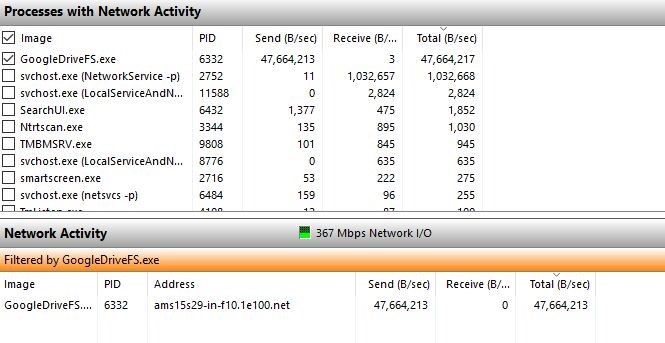

We can’t pinpoint exactly when, but performance for Google Drive File Stream uploads suddenly shot up to 47 MBytes/sec.

We can’t prove it, though we certainly tried. But we suspect that we have uncovered a mysterious relationship between Google Drive uploads and BGP/GGC. Chalk it up to Betsy and the tractor.